There’s good and bad news for you when it comes to artificial intelligence, the technology that, depending on who you ask, could power the fourth industrial revolution or accelerate humanity’s demise.

The first major report to look at the implications of AI for New Zealand in the coming decades paints a picture of a booming AI-driven economy, surprisingly few jobs lost to automation in the next 40 years and the potential to use the technology to tackle everything from inequality to environmental decline.

But New Zealand has no coherent strategy to manage the use of AI, our business leaders aren’t factoring it into their planning to any great degree, and we have a shortage of AI researchers and skilled graduates to write the algorithms and design the machines of the future.

Even worse, our early forays into AI-driven decision making in government have been shaky and there’s no official oversight of the use of algorithms for predictive risk modelling and government decision making – let alone in the private sector.

“There’s no ethical framework around it, which is really concerning for me,” says Clare Curran, Minister of Government Digital Services, who launched the AI Forum’s report Artificial Intelligence: Shaping Our Future, in Wellington last week.

“There’s also no economic framework around it as to what the economic benefits can be. Why is it so urgent? Because more than 140 organisations are already working with, or investing in, AI in New Zealand.”

That’s right: more than 140 organisations are heavily involved in artificial intelligence, from Auckland-based Soul Machines, which is making virtual intelligent assistants for the likes of Air New Zealand, to Ohmio, which is building self-driving shuttles in Christchurch. Across the economy, 20 per cent of organisations surveyed by the AI Forum, from law firms to dairy farms, have adopted AI in some capacity.

Innovation is thriving, but in the face of huge investment from tech giants like Google and Microsoft as well as countries like China and the US, we risk being left behind in what is widely considered to be the transformational technology of the 21st century.

“This is the new electricity or the new internet,” says AI Forum Executive Director, Ben Reid.

“We’ve a growing body of evidence now that AI is a similarly transformational technology that will enable economic transformation within our lifetimes.”

Some have taken that to mean mass job destruction, the rise of robots and runaway algorithms with a mind of their own.

“It’s often misrepresented with these science fiction scenarios, which aren’t really helping the popular conversation,” he admits.

How disruptive the change will be depends on the timeframe over which it occurs, and on that point there is great uncertainty. There are also major technical barriers to overcome before AI can come close to true human-like intelligence.

AI’s impact – the report’s highlights

- AI could increase GDP by up to $54 billion by 2035

- Up to 1 million jobs could be displaced by AI in the next 40 years, about 10% of the jobs expected to be displaced by normal market changes

- Only 36% of company boards are discussing AI

- New Zealand ranks 9th among 35 OECD countries for Government AI Readiness

- New Zealand has 85 of the 22,000 PhDs with an AI speciality worldwide

Source: AI Forum – See the report’s recommendations at http://www.aiforum.org.nz

AI – How did we get here?

Since Alan Turing, the tragic figure of Bletchley Park, wrote his seminal 1950 paper Computing Machinery and Intelligence, scientists have dreamt of developing machines that can think the way humans do.

“People had this notion of making machines that were intelligent in human ways, that could understand and reason and perform similar types of tasks to humans,” says Jeff Dean, Google’s head of artificial intelligence, who has been with the company since 1999 and contributed to its most successful products, such as Google Search and its advertising engine.

Starting in the 1950s, computer programmers attempted to make machines intelligent using hand-coded rules to define logical procedures. That got us a long way, including to the personal computer.

“But in the last 10 or 20 years, we’ve realised that it is very hard to encode everything about the world as logical rules,” says Dean.

New techniques were needed to cope with the complexity of real-world applications, and the answer was machine learning. It has emerged as a significant field, along with deep learning, as computers have become more powerful and access to big pools of data has given machines the raw material to learn from.

“It is allowing machines to learn by making observations about the world in the same way that humans and other animals do by learning to recognise patterns. It is a better way than writing hand-coded rules,” says Dean.

The transition is well illustrated by two iconic contests between humans and computers. In 1997, IBM pitted its Deep Blue supercomputer against world chess champion Garry Kasparov and won the match through brute-force computing.

“With Deep Blue they used chess-specific accelerated hardware and were able to make a computer that could play chess and actually beat Garry Kasparov,” says Dean.

It was considered a pivotal moment in the development of AI, but it was based on hand-programmed rules backed by raw computing power. In 2015, Google’s UK-based DeepMind division, which was co-founded by New Zealand computer scientist Shane Legg, tackled Go, an ancient Chinese strategy board game that is far more complicated than chess.

“You need a system that can learn interesting patterns that occur during game play and to learn the intuitions that a human Go player has,” says Dean.

“Using machine learning, we can expose the system to games played by humans and learn to play Go from observations of those patterns.”

The AlphaGo program was the first to beat a professional Go player and went on to defeat Chinese world champion Ke Jie last year. It was more than a game. Google’s win hinted at how machine learning could make artificial intelligence much more useful.

In 2012, Dean had started experimenting with a subset of machine learning – neural networks.

“These are systems that are loosely inspired by how biological brains behave,” he explains.

“They are structured in layers that have individual neurons which recognise certain kinds of patterns.”

He and his colleagues fed 10 million still images taken from YouTube videos into a neural network program powered by 16,000 computer processors.

“By exposing the model to thousands or millions of training examples, where humans have labeled what the correct answer is, it can learn very complicated functions,” says Dean.

The program then began identifying and categorising thousands of different objects on its own, each neuron in the digital brain responsible for identifying a particular attribute and talking to the other neurons to create a fuller picture.

Without being told what to look for, and in a telling reflection of what people upload to YouTube, it started identifying cats.

“We are going from the raw pixels of an image to the correct label of ‘cat’,” says Dean.

Machine learning and deep neural networks are now powering Google as it switches to becoming an “AI-first company”.

Everything from Gmail and Google Maps to Google Assistant and the camera in its Pixel smartphone now uses machine learning.

“Today we have seven products that have over a billion users. By improving all of those products using machine learning, we will be able to have a really big impact on more than a billion people,” says Dean.

But what Google, arguably the world’s leader in AI, is working on is “narrow AI”. It is a world away from general AI, which could mimic the more instinctive, free-form decision-making of human beings.

Where are we going?

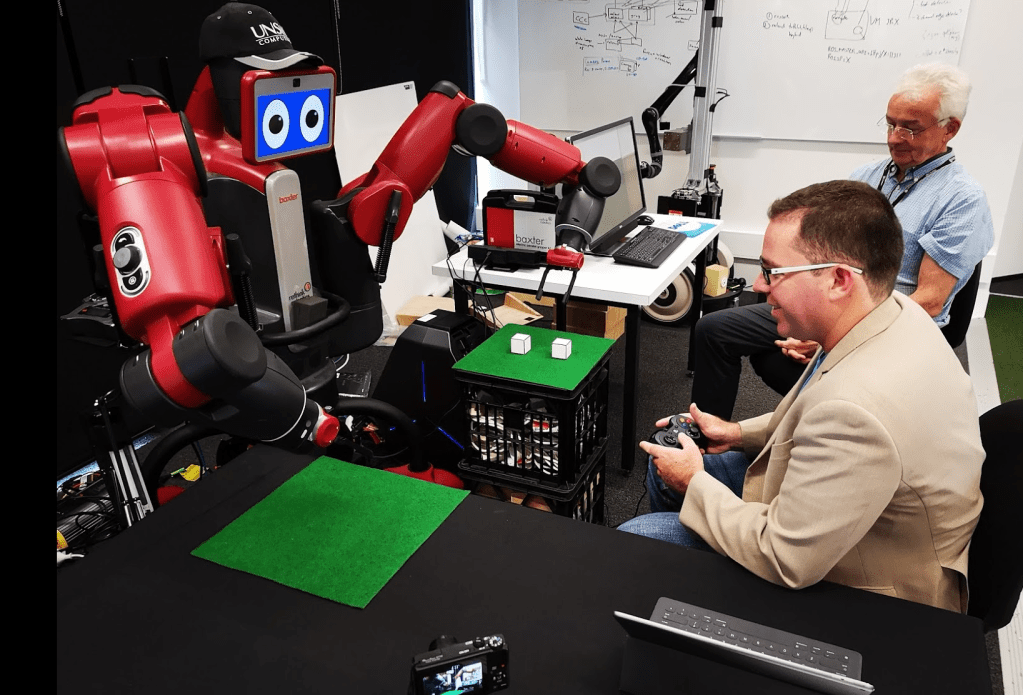

“We are still only building idiot savants that do one narrow task well,” says Toby Walsh, professor of artificial intelligence at the University of New South Wales and one of the few scientists to have a miniature football field in his lab for holding robo-soccer games.

AlphaGo was a milestone, but Google threw huge resources at what was still a very narrow task.

“We don’t have any idea how to build general intelligence,” says Walsh.

“We don’t have machines that are sentient, conscious, have desires of their own. They do what we tell them to do and that’s often the problem, they do what we tell them to do when we haven’t properly specified what we want them to do.”

Walsh has surveyed hundreds of his AI colleagues in academia, asking them when they think we will create machines as intelligent as ourselves. Their answer: 2062. He also surveyed 500 members of the public, whose mean estimate was 2045.

“It suggests a mismatch in expectations,” says Walsh.

“They see the reports and think it is 20 years away at most. In fact it is 40 to 50 years.”

Google’s Director of Engineering and futurist Ray Kurzweil is on the public’s side – he claims the “singularity” will happen by 2045. Cosmologist Stephen Hawking increasingly warned of the risk AI poses to humans in the years leading up to his death in March. Silicon Valley entrepreneur Elon Musk, founder of Tesla and SpaceX, says only two things stress him out at the moment – trying to fix production issues with his Model 3 electric car, and the looming threat of AI.

“Musk is a fantastic engineer but he is not an AI researcher,” says Walsh.

“There are significant challenges ahead. It is incompetence not malevolence we’ve got to worry about.”

Dean agrees.

“They are very distant, far off fears,” he says.

“I do think as we start to deploy more machine learning and AI systems in the real world where they are taking actions that could have serious consequences, there are concrete safety problems in deploying them.”

Will artificial intelligence take your job?

The job destroying potential of artificial intelligence has created most of the angst around the technology. Various studies have predicted that up to half of all existing jobs could be rendered obsolete by algorithms and robots in the coming decades.

The AI Forum, on the other hand, after analysing the literature from around the world and studying 18 sectors of industry in New Zealand, suggests that in a worst-case scenario just a million jobs will be lost to AI over the next 40 years.

That’s about 10 per cent of the more than 10 million jobs expected to be displaced by “normal market changes” over the same period.

Industries with a large workforce and high use of technology, such as manufacturing or the tech industry itself, are the most likely to see automation claim jobs. One of the least likely sectors is agriculture, which only employs a small sliver of the workforce and isn’t a huge user of technology.

“We’ve no idea whether more jobs will be created than destroyed,” argues Walsh.

“Unemployment is at record low levels, we’ve created lots of new jobs. But there’s no guarantee that will be true going forward.”

AI could also plug gaps in the workforce where it is hard to find people to do the job.

“Automation is potentially solving some of the demographic challenges,” says Reid.

“Some industries, such as healthcare, have ageing workforces, where we are already seeing shortages of workers.”

Jeff Dean, perhaps unsurprisingly for an AI engineer, is optimistic about the impact on work.

“Every time technology has displaced what was previously human labour, we’ve gone on to create new and interesting professions on top of this technology,” he says.

“We’ll have entirely new types of jobs we don’t even think of today. Who would have thought about social media consultants before the web existed?”

We have a mountain to climb to educate and skill our younger generation for an AI-driven world. Those who have to retrain as their professions vanish will need significant help too.

“The jobs that do get created will require different skills to the ones that are taken away,” says Walsh.

“We need to think about how we reskill people so they aren’t thrown onto the scrapheap and never get a job again.”

Autonomous weapons

Walsh was in Geneva, trying to convince United Nations diplomats from 123 countries to consider a moratorium on the use of lethal autonomous weapons, when news broke of the most recent chemical attacks in Syria.

“In a macabre way it was very gratifying what was happening around chemical weapons in Syria, the way the world came together. Sanctions were imposed, diplomats were expelled,” he says.

As part of the Campaign to Stop Killer Robots, which is run by Wellingtonian Mary Wareham, he put it to the UN that use of autonomous weapons should receive similar treatment.

“Autonomous weapons will be terribly destabilising. Previously if you wanted to have a large army you had to be a superpower. Now you will just need some cheap robots,” says Walsh.

“The current balance of power could change dramatically.”

Autonomous weapons are being developed by the armies of the world’s superpowers in partnership with industry. Drones have already been automated to the extent that they can take off and land, conduct surveillance missions and, at the behest of a human ‘pilot’ holding a controller often thousands of kilometres away, fire missiles.

“The UK’s Ministry of Defence said they could remove the human and replace it with a computer today,” says Walsh.

“That’s a technically small step.”

South Korea’s Sentry robot, developed by a subsidiary of Samsung, can be armed with a machine gun and grenade launcher and uses motion and heat sensors to detect targets up to three kilometres away. The robots have been installed along the border with North Korea.

China is pursuing autonomous weapons, but surprised the UN Summit when its delegation announced its “desire to negotiate and conclude” a new protocol for the Convention on Certain Conventional Weapons, “to ban the use of fully autonomous lethal weapons systems”.

“There’s still relatively little autonomy in the battlefield. But it is less than ten years away,” Walsh estimates.

The AI Forum has urged our own Government to consider taking a more prominent international leadership role in the push for a moratorium.

Walsh wants the Convention updated to ban the use of lethal autonomous weapons, but China’s main military rivals, the US, Russia, Israel, France and the United Kingdom, think a ban could stifle the development of weapons capable of saving lives and reducing collateral damage. Walsh disagrees.

“We didn’t stop chemistry,” he says, returning to the chemical weapons parallel.

“But we did decide it was morally unacceptable to use chemical weapons.”

Will algorithms help run your country?

AI is set to “revolutionise public service delivery,” claims the AI Forum report, which sees potential for the technology to help tackle social issues such as poverty and inequality, clean up the environment and raise our standard of living.

But AI in Government is “currently disconnected and sparsely deployed”, the report finds.

“I wouldn’t go as far as saying that in government, the right hand doesn’t know what the left hand is doing, but no one has a full sense of what’s going on,” says Associate Professor Colin Gavaghan, director of the New Zealand Law Foundation Centre for Law and Policy in Emerging Technologies at the University of Otago.

That became clear last month when it emerged that Immigration New Zealand had, for the last 18 months, been running a pilot project modelling data such as the age, gender and ethnicity of overstayers, to identify which groups commit crimes and run up hospital bills.

It was news to Immigration Minister Iain Lees-Galloway, who only heard about it when approached by Radio New Zealand for comment. It had shades of the Ministry of Social Development controversy of 2015, when the agency angered its minister, Anne Tolley, by proposing a study to test its predictive risk modelling tool.

The study was to involve 60,000 children born in 2015 and try to predict child abuse, future welfare dependency and criminal activity. A group of the newborns would be given a risk rating and monitored over two years, without intervention in the high risk cases, to see if the ministry’s predictions were accurate.

“Not on my watch, these are children, not lab rats,” Tolley scribbled on the proposal, killing it dead.

“In both cases they responded by pulling the plug on the use of the algorithm,” says Gavaghan.

“Wouldn’t it be better if government knew what was happening in this regard at least within their own house?”

Overseas risk modelling programmes have run into problems with bias and accuracy, which would need to be tackled here early to avoid public trust evaporating.

“We aren’t comparing the algorithms with some fanciful perfect human decision maker. We think there’s a possibility that algorithms could do better. That’s what we should be demanding and making sure we get.”

Gavaghan and his colleagues have proposed setting up an oversight body to monitor the use of AI-based predictive tools, something that was also a key recommendation in the UK House of Lords report on AI released last month.

A key focus would be on transparency and the concept of “explainable AI”.

“If someone challenges the decision, about people being let out of prison, overstayers being made to leave the country, or ACC payments, if people think it is wrong or unfair, how transparent is the system in being able to explain the reason that led to the decision?”

Curran agrees formal oversight is needed.

“If you look across government, there would be at least a dozen agencies that are using artificial intelligence, whether it is ACC, the Department of Corrections, MPI or Customs, but it’s a bit of a wild west,” she says.

“The gap with the social investment agency and what happened with it having to be put on pause, was that there was no framework for data governance, how data should be shared, was the data identifiable, what were the ethical considerations that needed to be taken into account?”

She and James Shaw, the Minister of Statistics, would work on the issue as a priority. In the meantime, she has formalised the Government’s relationship with Gavaghan and other researchers at the University of Otago, who are establishing the Centre for Artificial Intelligence and Public Policy.

It will advise the government on its use of AI in decision making and delivering services to citizens.

The government’s still-to-be-appointed Chief Technology Officer will develop a technology strategy for the country in which AI will feature strongly, says Curran. New Zealand is also working with the “D7” group of digitally progressive small and mid-sized nations to look at “algorithmic accountability, the impact of AI on digital rights, line of sight for personal data, and digital inclusion”.

Welcome to your robot doctor

Imagine yourself lying on an operating table as the anaesthetic starts to take hold. The last thing you see before fading out is the mechanical arm of STAR, the Smart Tissue Autonomous Robot, moving into position, its scalpel lowering over your abdomen.

The AI Forum report suggests autonomous surgical robots will be in action in two to four years. Already STAR and similar surgical robots are using machine learning and computer vision to give surgeons a run for their money when it comes to accuracy and precision in surgery – on certain tasks.

Surgical robots have been used in New Zealand hospitals since 2007, when Tauranga’s private Grace Hospital undertook the first robot-assisted operation using the da Vinci robot. Since then other private hospitals in Auckland and Christchurch have added da Vinci robots. In 2016, Southern Cross North Harbour Hospital carried out the country’s first robot-assisted throat cancer operation, removing a tumour by extending a robotic cutting tool into the patient’s mouth.

The robots are mainly used for laparoscopic or keyhole surgery, entering the body through small incisions, and have been used to perform prostatectomies for prostate cancer, bladder procedures and abdominal operations. But there is always a human hand on da Vinci’s controls. A surgeon, such as New Zealand’s top robo-surgeon, Professor Peter Gilling, sits at a computer console and guides the robot’s every move.

The advantages are greater precision, quicker operations, smaller scars and faster recovery, which is why private hospitals have adopted them first to gain a competitive edge. Robots like STAR will take things a step further. In a trial completed last year, the robot was given the task of suturing together parts of a pig’s bowel. STAR did so with greater accuracy than experienced surgeons.

Where machines could take over entirely in healthcare is where they play to the current strength of artificial intelligence: analysing large amounts of data to detect patterns and make predictions.

Google’s Dr Lily Peng was working on a project looking at the health-related results the company’s search engine returned when she began exploring whether Google’s advances in machine learning and computer vision could be applied to medicine.

“I thought, if we can detect dog breeds in images, maybe we can help with medical images as well,” she says.

She formed a team to look at diabetic retinopathy, a disease that leads to blindness if left untreated. Eye specialists take photos of a diabetes patient’s retina to look for tiny aneurysms. Peng and colleagues hired 54 doctors to make diagnoses based on the photos, then fed 130,000 of these diagnosed images into Google’s TensorFlow machine learning system to train it.

“We very closely matched the performance of the eye doctors; our algorithm is just a little bit better than the median ophthalmologist,” says Peng.

The software is now undergoing clinical validation in Thailand and India, where there is a massive shortage of eye doctors.

“In some parts of the world, there simply aren’t enough specialists to do this task,” says Peng.

“In India, 45 per cent of diabetes patients suffer some sort of vision loss before they are diagnosed. It’s entirely preventable.”

She says the tools can be applied to all sorts of medical imagery, including cancer biopsy images, where trials have shown Google’s algorithms can beat expert pathologists at picking out breast cancer.

The big AI-driven revolution in health will be the advent of personalised medicine, says Toby Walsh.

“There’s an arms race coming in health. We’ll be able to personalise medicine, combine AI with what we are starting to know about genetics on a personal level to tackle many of the diseases that afflict us,” he says.

“The risk is that we’ll end up like in the digital space where we don’t own that data. If Fitbit discover a way to predict heart attacks they could sell back that diagnostic tool to you at great cost because you want to know if you are going to have a heart attack.”

Will you ride in a driverless car?

Uber’s fatal self-driving car crash in March, in which a sensor-equipped Volvo failed to avoid Elaine Herzberg as she walked her bicycle across a road at night in Tempe, Arizona, suggests driverless cars still need further development.

But most experts agree that the technology is close to ready and that it will save lives, as well as taxpayer dollars spent on cleaning up after accidents.

“It will be superhuman at driving,” says Walsh. “It has better sensors than you: lidar, radar, a better vision system. It won’t be distracted by the radio, it won’t be texting or drunk. It will never get lost and will always know what the speed limit is.”

“The accident rate will get close to zero.”

Michael Cameron, a solicitor at the Department of Corrections, has been researching driverless cars as the Law Foundation’s International Research Fellow.

He has proposed eight key changes to the law that will be required to make sure autonomous vehicles can be tested and used legally on New Zealand roads, including clarifying that the law doesn’t require a driver to be behind the steering wheel.

“The technology is invented already. If we really want it to happen, we need a receptive legal environment,” he says.

There will still be occasional crashes as driverless cars take to the roads, but Cameron’s research suggests there is strong evidence that replacing human decision-making with AI can largely eliminate traffic accidents and reduce congestion.

“If it can reduce the numbers of deaths and injuries on the roads, then that is to be welcomed,” Gavaghan says.

“We shouldn’t be settling for just a bit better, if we can make it much better.”
